The Unexamined System

We instrument our servers with more care than we instrument ourselves. On observability, introspection, and the dashboard we are afraid to build.

The pager went off at 2:47 a.m. on a Tuesday. I was asleep, and then I was not. The phone on my nightstand was vibrating with the particular insistence that on-call engineers learn to distinguish, even in dreams, from every other kind of notification. I picked it up, opened the alert, and within thirty seconds I was sitting at my desk with four browser tabs open: the metrics dashboard, the log aggregator, the trace viewer, and the service map.

I could see everything. CPU utilization across forty-two instances, plotted in fifteen-second intervals. Memory consumption, request latency at the 50th, 95th, and 99th percentiles. Error rates broken down by endpoint, by status code, by upstream dependency. I could click on any single request that had failed and trace its journey through eleven services, seeing exactly where it had stalled, for how long, and why. The system was exposed to me with a transparency that bordered on the obscene. Every heartbeat, every hiccup, every tremor in its vast and intricate nervous system was recorded, indexed, and displayed in real time on a screen in my bedroom.

I found the problem in fourteen minutes. A connection pool was exhausted because a downstream service had increased its response time by forty milliseconds — enough to cause requests to queue, enough for the queue to grow, enough for the pool to drain. I adjusted a timeout, the queue cleared, the metrics recovered, and the system returned to health. I closed the laptop.

And then I lay in the dark, heart still pounding from the adrenaline, and I noticed something that I have not been able to stop thinking about since. I had just diagnosed, in fourteen minutes, a subtle failure in a distributed system of extraordinary complexity. But I could not tell you why my heart was pounding harder than the incident warranted. I could not tell you why I had snapped at my partner earlier that evening over something trivial. I could not tell you what, precisely, had been sitting in my chest for days like a weight I could not name: not the alert, but something older, something underneath.

I had no dashboard for any of it. No metrics. No logs. No traces. The most complex system I will ever encounter — my own mind — was running unmonitored, uninstrumented, in production, with no alerting and no observability, and I had been treating this as normal.

The three pillars and the one mirror

In software engineering, we talk about the "three pillars of observability": metrics, logs, and traces. Each captures a different dimension of a system's behavior. Metrics are numbers that change over time — request count, error rate, memory usage. They tell you the shape of what is happening, aggregated and compressed. Logs are discrete events — timestamped records of specific things that occurred. They tell you what happened, in sequence, with context. Traces follow a single request through the system end to end, linking the events across services into a narrative. They tell you how something happened, step by step, from cause to consequence.
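To make the difference in shape concrete, here is a minimal Python sketch. The record types, their field names, and the `render_trace` helper are all hypothetical, invented for illustration; real telemetry systems such as OpenTelemetry define richer schemas of their own. A metric is one aggregated number, a log is one event with its context, and a trace is a tree of spans that share a `trace_id` and point to their parents. That parent link is what lets a trace viewer show where a single request spent its time.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical record shapes for illustration only; real telemetry
# systems (OpenTelemetry, for instance) define their own schemas.

@dataclass
class MetricPoint:
    name: str         # e.g. "error_rate"
    timestamp: float  # when the aggregate was taken
    value: float      # one number summarizing many events

@dataclass
class LogEvent:
    timestamp: float
    message: str      # one discrete thing that happened
    attributes: Dict[str, str] = field(default_factory=dict)  # its context

@dataclass
class Span:
    trace_id: str             # shared by every span of one request
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    service: str
    start_ms: int
    end_ms: int

def render_trace(spans: List[Span], trace_id: str) -> List[str]:
    """Rebuild one request's span tree and list it root-first, indented by depth."""
    children: Dict[Optional[str], List[Span]] = {}
    for s in spans:
        if s.trace_id == trace_id:  # ignore spans from other requests
            children.setdefault(s.parent_id, []).append(s)

    lines: List[str] = []

    def walk(parent_id: Optional[str], depth: int) -> None:
        # Visit siblings in the order they started, then descend into each.
        for s in sorted(children.get(parent_id, []), key=lambda sp: sp.start_ms):
            lines.append(f"{'  ' * depth}{s.service}: {s.end_ms - s.start_ms} ms")
            walk(s.span_id, depth + 1)

    walk(None, 0)
    return lines

# A hypothetical request passing through three services.
spans = [
    Span("t1", "a", None, "gateway", 0, 120),
    Span("t1", "b", "a", "orders", 5, 115),
    Span("t1", "c", "b", "db", 10, 100),
    Span("t2", "d", None, "gateway", 0, 30),  # a different request
]

for line in render_trace(spans, "t1"):
    print(line)
```

Run as written, this prints the `gateway`, `orders`, and `db` spans as an indented tree with their durations (120, 110, and 90 ms), which is the same narrative a trace viewer draws as nested bars.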

Together, the three pillars give you something that no single one provides alone: the ability to ask questions you didn't anticipate. This is the distinction that Charity Majors, one of the clearest thinkers on the subject, draws between monitoring and observability. Monitoring is knowing what to check. You define the alerts in advance: if CPU exceeds 80%, page someone. If error rate exceeds 1%, page someone. Monitoring answers known questions.
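Monitoring in this sense can be sketched as a list of rules written down before anything goes wrong. The Python below is a hypothetical illustration, not any particular tool's API: the rule names, metric names, and the `evaluate` function are invented here, and the two thresholds are the ones from the paragraph above.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class AlertRule:
    name: str
    metric: str
    threshold: float  # page someone when the metric exceeds this value

# Questions decided in advance: CPU above 80%, error rate above 1%.
RULES = [
    AlertRule("high-cpu", "cpu_utilization", 0.80),
    AlertRule("high-error-rate", "error_rate", 0.01),
]

def evaluate(rules: List[AlertRule], snapshot: Dict[str, float]) -> List[str]:
    """Return the names of rules whose metric exceeds its threshold.

    Only metrics named by a rule are ever checked; anything else in the
    snapshot, however extreme, cannot trigger a page.
    """
    return [r.name for r in rules if snapshot.get(r.metric, 0.0) > r.threshold]

# "pool_wait_ms" is a hypothetical metric that no rule mentions.
snapshot = {"cpu_utilization": 0.92, "error_rate": 0.004, "pool_wait_ms": 850.0}
print(evaluate(RULES, snapshot))  # ['high-cpu']
```

The hypothetical `pool_wait_ms` value can climb as high as it likes without paging anyone, because no one wrote a rule for it. A rule set can only answer the questions someone thought to encode.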

Observability is the ability to ask new questions — questions you have never thought to ask before, prompted by behavior you have never seen before. It is the ability to explore the system's state from the outside, to follow threads of causation through unfamiliar territory, to understand failures that no one predicted. Monitoring tells you that something is wrong. Observability lets you understand why.

Now consider: what would the three pillars look like for a human mind?

Metrics would be the quantitative dimensions of your inner life. Heart rate, yes, but also: how many times today did your attention wander during a conversation? What is your running average of sleep quality this week? How long has it been since you felt genuine curiosity, as opposed to mere interest? What is the latency between a stimulus — someone criticizing your work, say — and your response? Is that latency increasing or decreasing over the years?

Logs would be the discrete events. The moment at 3:15 p.m. when you felt a flash of resentment toward a colleague and suppressed it. The dream you had last night that you've already half-forgotten. The sentence you read this morning that made you stop and reread it, though you couldn't say why. The micro-expression on your child's face that you noticed but did not respond to. Each one a timestamped entry in a record that no one is keeping.

Traces would follow a single thought or emotion from its origin to its expression. The irritation that began as a tightness in your shoulders at 9 a.m., became a short tone with your partner at noon, became an unkind thought about a stranger on the train at 5 p.m., and finally emerged as insomnia at midnight — a single trace through the distributed system of your psyche, connecting events that seem unrelated until you see the thread that runs through them.

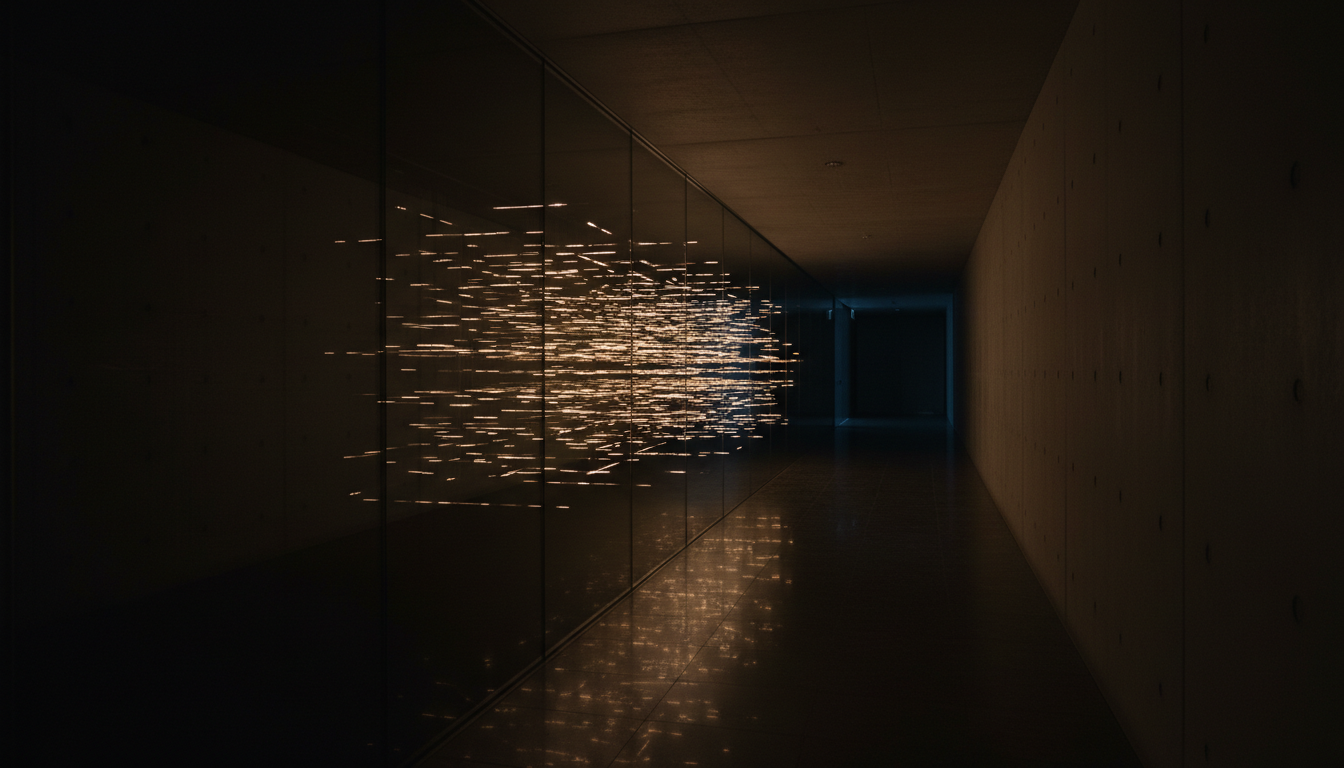

We have none of this. We operate in the dark.

The oldest monitoring framework

Except that we do have it. We have had it for twenty-five hundred years.

In the fifth century BCE, the Buddha delivered a discourse called the Satipatthana Sutta — the "Foundations of Mindfulness" — that is, if you read it with an engineer's eye, a monitoring framework for the human mind. It specifies four domains of observation: the body (kaya), feelings (vedana), mind states (citta), and mental phenomena (dhamma). For each domain, it prescribes a practice of continuous, non-reactive observation. Watch the breath. Note the sensations. Observe the arising and passing of emotions. Record the quality of your attention itself.

The language is different. The cultural context is unrecognizably distant. But the structure is identical to what we build for our infrastructure. Define the signals. Instrument the system. Observe without intervening. Build a model of how the system behaves, so that when something goes wrong, you have the context to understand why.

The Buddha was not the only one to build such a framework. The Stoics had their prosoche — the practice of continuous self-attention that Epictetus and Marcus Aurelius wrote about with the precision of engineers documenting a protocol. "Keep watch over yourself as though you were your own enemy," Epictetus wrote, "lying in wait to ambush you." This is alerting. This is monitoring with the threshold set to zero.

Ignatius of Loyola developed the Examen — a twice-daily practice of reviewing the movements of the soul, noting where consolation and desolation arose, tracking patterns over time. It is, in contemporary terms, a log review. A daily standup with your own psyche.

William James, the father of American psychology, spent years attempting to build a science of introspection — a rigorous, systematic method for observing the mind from the inside. He failed, or believed he failed, and his failure haunted him. "Introspective observation is what we have to rely on first and foremost and always," he wrote in The Principles of Psychology. "The word introspection need hardly be defined — it means, of course, the looking into our own minds and reporting what we there discover. Everyone agrees that we there discover states of consciousness."

But James also understood the fundamental problem, and it is the same problem that every contemplative tradition has grappled with, and it is the reason we do not monitor our minds with the same rigor with which we monitor our servers.

The observer problem, again

In infrastructure monitoring, there is a clean separation between the observer and the observed. The monitoring agent runs on the system, yes, but it is a separate process. It watches the application from outside. It has its own lifecycle, its own resources, its own perspective. When I open Grafana at 2:47 a.m., I am not part of the system I am diagnosing. I am outside it, looking in, and this separation is what makes diagnosis possible.

In the mind, there is no such separation.

When you attempt to observe your own anxiety, who is doing the observing? The anxious mind. When you try to trace the origin of your irritation, the instrument you are using to trace is the same instrument that is irritated. The observer and the observed are the same system. There is no external monitoring agent. There is no Grafana for the soul.

This is not merely an inconvenience. It is a fundamental architectural problem. In systems engineering, we know that a monitoring system that shares resources with the application it monitors will produce unreliable data under load — precisely when you need it most. When the system is healthy, the monitor works fine. When the system is failing, the monitor fails too, because it is running on the same failing hardware.

The mind works exactly this way. When you are calm, introspection is easy. You can observe your thoughts with clarity, note your emotional state with precision, trace the origins of your feelings with something approaching objectivity. But when you are in crisis — when you are angry, or afraid, or depressed, or caught in a spiral of anxiety — the instrument of observation degrades along with the system it is trying to observe. You cannot clearly see your own panic while you are panicking. You cannot rationally analyze your irrationality while you are being irrational. The monitoring system and the application are running on the same hardware, and when the hardware fails, everything fails together.

This is why the contemplative traditions emphasize practice during calm times. The Buddhist meditator sits on a cushion in a quiet room, not because the cushion is where the insights are needed, but because the cushion is where the monitoring instrument can be calibrated. You practice observing your mind when it is healthy so that the skill is available — cached, automatic, moved from the prefrontal cortex to the basal ganglia — when the mind is in crisis. You are training the observer to function even when the system is under load.

The dashboard we refuse to build

But there is another reason we do not monitor our minds, and it is simpler and more uncomfortable than the observer problem.

We do not want to see the data.

I have worked at companies where a team resisted adding monitoring to a service — not because it was technically difficult, but because they suspected the data would be embarrassing. They knew, at some level below articulation, that the service was slow. That the error rate was higher than it should be. That the architecture had problems they had been avoiding. The dashboard would make the problems visible, and visible problems demand action, and action is expensive, and they were already stretched thin.

So they did not build the dashboard. The service kept running, mostly fine, occasionally failing in ways nobody could diagnose because there was no observability. Each failure was treated as an isolated incident rather than a symptom of a systemic problem, because without data there is no system, only a series of surprises.

This is precisely how most of us relate to our own minds. We suspect, at some level, that the data would be uncomfortable. That if we actually tracked our emotional responses with the fidelity of a metrics dashboard, we would see patterns we would rather not see. That we are more anxious than we admit. That we are less kind than we believe. That the anger we treat as justified is more frequent and less rational than we imagine. That the relationship we describe as "fine" has a latency problem that is slowly degrading the connection.

The unmonitored mind is comfortable in the way that a service without dashboards is comfortable. You don't know what you don't measure. And what you don't measure can't disappoint you. The ignorance is not bliss, exactly — there is a low-grade unease, the same unease that an engineer feels about a service they cannot see into — but it is easier than the alternative. The alternative is the dashboard. The alternative is seeing yourself in high resolution.

Socrates said that the unexamined life is not worth living. This is one of the most quoted sentences in philosophy, and it is also one of the most ignored. We quote it and we do not practice it. We admire the sentiment and we do not build the dashboard. And Socrates, remember, was executed at least in part for that habit of examination: for asking people questions that forced them to see the gap between what they believed about themselves and what was actually true. The examined life is not just more worth living. It is more painful to live. And most of us, given the choice, will choose comfort over clarity.

What the traces would show

I want to be specific about what frightens me, because I think specificity is the only antidote to the vagueness that lets us avoid this.

If I had traces for my mind — if I could click on a moment of anger and follow it backward through the system to its origin — I suspect I would find that most of my emotional responses are not responses to the present moment at all. They are cached responses. Patterns learned in childhood, triggered by surface similarities to situations that no longer exist. The irritation I feel when someone questions my work is not a response to this person, in this room, asking this question. It is a trace that begins somewhere in my past, passes through decades of accumulated experience, and arrives in the present wearing the mask of the current situation.

A good observability tool would show me this. It would show me the full trace — not just the final event (the sharp word, the clenched jaw) but the entire chain of causation. The original wound. The pattern of reinforcement. The hundreds of times the same trace has fired, each time strengthening the pathway, each time making the cached response faster and more automatic and less visible to the consciousness that is producing it.

Eugene Gendlin, the philosopher and psychotherapist, developed a practice called "Focusing" that is, I think, the closest thing we have to a trace viewer for the mind. Gendlin noticed that the clients who made progress in therapy were not the ones who talked the most or analyzed the most or understood the most. They were the ones who could pause and attend to what he called the "felt sense" — a bodily, pre-verbal, holistic awareness of a situation that is more than any description of it. The felt sense is not an emotion. It is not a thought. It is the body's total response to a situation, before the mind has categorized it into familiar labels.

Gendlin's Focusing practice asks you to sit with the felt sense without naming it, without analyzing it, without trying to fix it. Just to observe it. To give it space. And then, slowly, to let it articulate itself — not in the language of the prefrontal cortex, but in its own language, which is felt and physical and often surprising. The trace reveals itself not through analysis but through attention.

This is observability in its purest form. Not monitoring — not checking a known metric against a known threshold. Observability: the ability to ask a question you have never asked before, about a state you have never encountered before, and to follow the answer wherever it leads.

The service that monitors itself

Here is what I have come to believe, lying in the dark at 3 a.m. with the laptop closed and my heart still pounding.

We instrument our servers because we accept that complex systems will fail in ways we cannot predict. We accept that the system is too large, too interconnected, too emergent for any single person to hold a complete model of it in their mind. We accept that without observability, we are blind — that we will be surprised, and that surprises in production are expensive.

But we do not extend this same humility to ourselves. We assume that we understand our own minds. That we know why we feel what we feel. That our self-narrative — the story we tell about who we are and why we do what we do — is accurate. We treat introspection as trivial, as something anyone can do without training, as though the most complex system in the known universe can be understood by casual inspection.

This is the same arrogance that a team displays when they say, "We don't need monitoring — we know how the system works." They are always wrong. The system is always more complex than they think. And the failures always come from the places they were not watching.

I do not have a solution. I do not have a dashboard to offer you, or a practice that will make the interior life as legible as a Grafana panel. The observer problem is real. The discomfort is real. The mind's resistance to seeing itself clearly is not a bug but a deeply evolved feature, a defense mechanism against the vertigo of genuine self-knowledge.

But I think the first step is the same first step we take with any unmonitored system. You admit that you are blind. You acknowledge that the stories you tell about yourself are approximations at best and confabulations at worst. You accept that you do not know why you do what you do, not really, not with the precision you would demand of any system you were responsible for.

And then you sit. In the quiet. And you watch. Not with judgment — judgment is the mind monitoring itself and issuing alerts, which is a different thing entirely. With attention. Patient, non-reactive, curious attention. The kind of attention that an engineer brings to a system they are trying to understand for the first time. The kind that says: I don't know what this system does. I don't know how it fails. But I am going to watch it, carefully, for a long time, and I am going to let it show me.

The monks have been doing this for twenty-five hundred years. The engineers have been doing it for twenty-five. The tools are different. The practice is the same.

Observe the system. Instrument yourself. Build the dashboard you are afraid to see.

The pager will go off eventually. It always does. And when it does, you will want to have been watching.
